Currently, cyber insurance covers first-party damage arising from a data breach. This includes the cost of recovering lost data, legal and forensic costs, and perhaps some consequential damages such as third-party liability claims.

In the post-DPDPA scenario, there is concern about the cost of the administrative fine, which could be substantial. Whether this fine, if any, can be insured is a grey area.

By its nature, the fine is levied for non-compliance with the law, besides other reasons such as causing harm to the data principal. It is therefore difficult to provide coverage since, in principle, insurance cannot protect and reward non-compliance with the law.

However, in most cases where fines are levied, the data fiduciary may claim compliance, and the dispute would be that the regulator does not agree that the measures taken were adequate. It would be a matter of debate whether there were “Reasonable” measures and “Due Diligence” on the part of the data fiduciary. It is possible that a breach was attributable to the action of a third party despite reasonable measures taken in good faith by the data fiduciary for compliance. This is like an automobile accident that occurs despite careful driving, and not because of a blatant violation of the law such as driving the wrong way down a one-way street or driving while drunk.

If automobile insurance, as well as the law that punishes drivers for rash and negligent driving, can distinguish between what is rash and negligent and what is not, should there be a similar discussion on the fines levied for DPDPA non-compliance?

In most cases, the order of the regulatory authority may specify the root cause of the incident and whether there was gross negligence or lack of good faith on the part of the data fiduciary. If so, should a “DPDPA Liability Insurance Policy” cover not only the cost of conducting the investigation, legal defence and meeting the liability to the data principals, but also the administrative fine (perhaps subject to a sub-limit)?

The insurance industry needs to ponder over this.

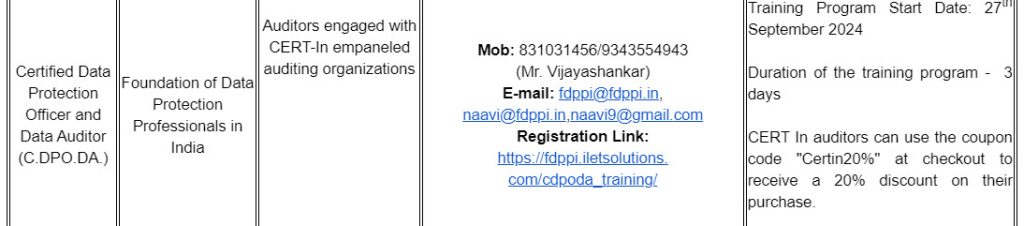

On the part of auditors, FDPPI would like to offer:

a) An assessment of DPDPA readiness to help an insurance company decide whether to accept an insurance proposal

b) An assessment of DPDPA penalty liability when an incident occurs or an inquiry is ordered by the Data Protection Board.

These assessments can be structured for the needs of the insurer and conducted at the instance of the insurance company.

They may differ from the assessments offered as “DPDPA Gap Assessment” or “DPDPA Compliance Implementation Assistance”.