FDPPI's New Year resolution for 2025 is to further promote the urgency of DPDPA compliance.

Towards this end, FDPPI continues to:

a) Build Awareness

b) Build Expertise

c) Provide the framework for compliance

d) Collaborate with PET developers

Currently, many individuals and organizations are working to create awareness of DPDPA. We welcome all these initiatives, though there may be differences of view on some aspects of the law. These differences arise mainly because other professionals may still be under the influence of the GDPR, while we try to take an independent jurisprudential view of the different aspects of the law.

Whether it is the definition of “Personal Data”, how to identify a “Significant Data Fiduciary”, how to work on the rights of grievance redressal and nomination, or data monetization, FDPPI may hold a slightly different view from some other professionals.

However, FDPPI welcomes the efforts of all community leaders in making “Data Privacy” a buzzword in the industry.

FDPPI now focuses on the next generation of work: enabling implementation through the suggested DGPSI framework, which can be used for implementation as well as for third-party audit and certification.

When a new idea like DGPSI comes to the market, many will continue to stick to the old practices and say, “You should have done what others have done for years”.

It is time to leave such advisors in the past and move ahead with DGPSI. The Birla Opus paint advertisement carries a similar message, one that captures exactly the sentiment I hold on DGPSI versus other frameworks.

DGPSI is an implementation framework that focuses on compliance with DPDPA. It has some revolutionary thoughts on data classification, process-based compliance, distributed responsibility, data monetization and more. In the past few months we have already seen some practitioners of other frameworks shifting their stand and saying that this is also their view and can be implemented in their current framework as well. I welcome such softening of the stand on DGPSI and look forward to them adopting DGPSI as a whole or incorporating its principles within the other frameworks they would like to stand by.

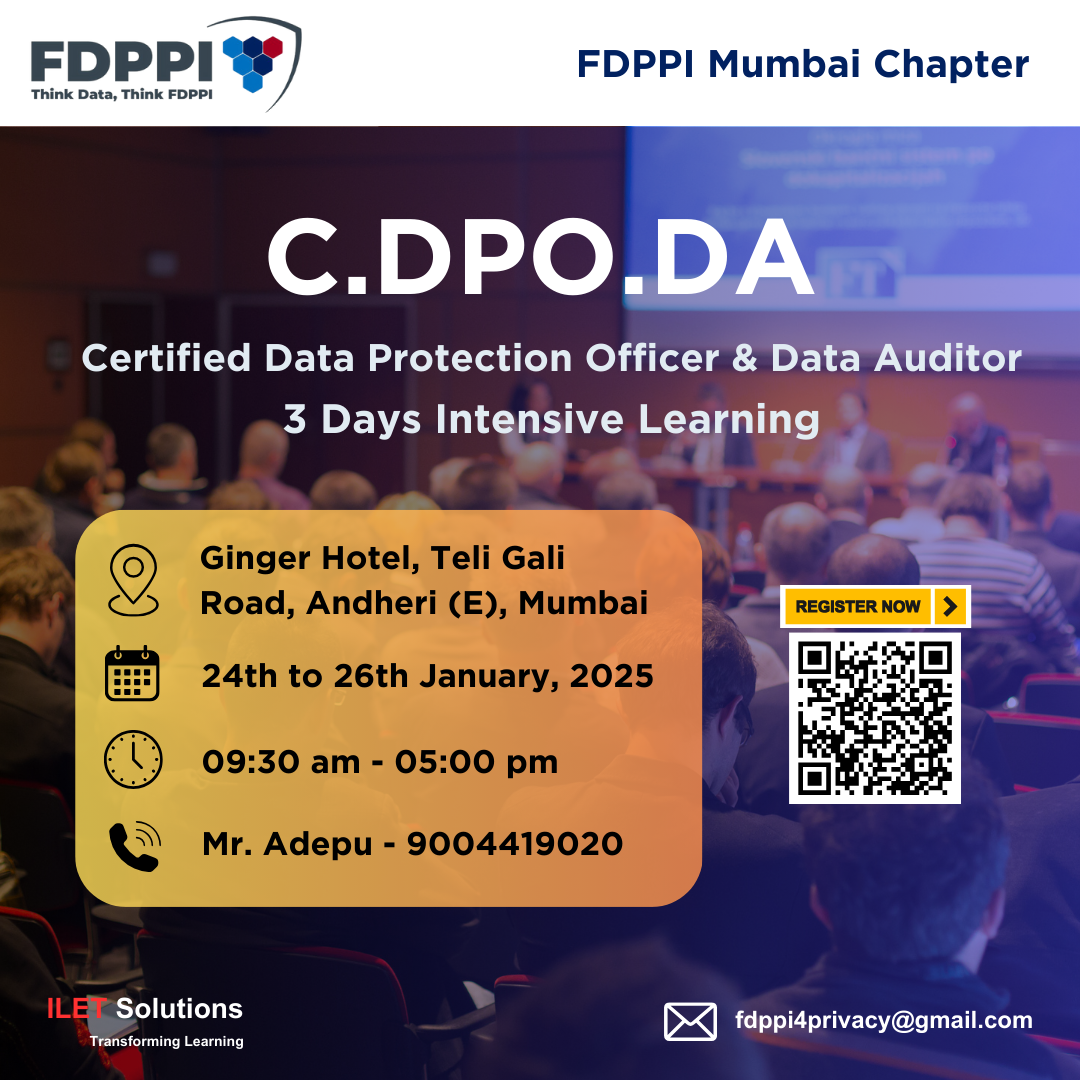

We intend to discuss DGPSI as a framework for DPDPA compliance in depth during the three-day workshop in Mumbai on January 24, 25 and 26.

Contact us today to register. This could be a turning point in the career of any ISMS auditor who would like to become a DPDPA auditor.

Say no to dogmas and yes to the new-generation framework of DGPSI.

Naavi