Naavi Academy has started a series of videos explaining DGPSI as a framework for compliance of DPDPA.

Here is an introductory video:

Yesterday we started a new round of discussion advocating the need to modify the well known CIA triad approach to Information Security to add “Preservation of Value of Data”. While all data has a value, the proposed concept of V & V was central to the security of Personal Data where there was a need to protect the personal data in such a manner that there would be a reduction of Risk of penalty under the Data Protection regulations.

Let us try to explore this further.

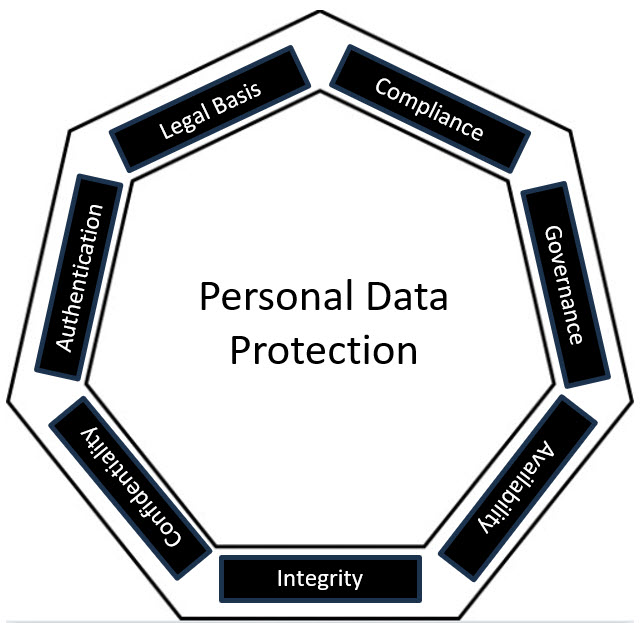

When I published the book “Guardians of Privacy…a comprehensive handbook on DPDPA 2023 and DGPSI”, which I suppose some of you must have read, I had published a Security approach (Page 210) in the form of a “Septagon” as follows.

This was an upgradation from the “Security Pentagon” which I had proposed much earlier as part of the Theory of Information Security Motivation. It included the requirements of Privacy through the “Governance”, “Compliance” and “Legal Basis” aspects, which replaced “Non Repudiability”, since that was included in “Authentication” itself.

These seven boundaries of Personal Data Protection represented the requirements of protecting the Personal Data in the current generation of Data Protection laws much better than the CIA concept which was used earlier by the community.

While “Legal Basis” and “Compliance” include the “Privacy Concepts”, “Governance” includes concepts such as recognition and preservation of the value of data, and other aspects such as Distributed Responsibility or “Data is created by technology but interpreted by humans”. These are not part of Compliance today but are considered essential for implementation of the DGPSI framework.

The Mod-CIA V&V concept is therefore another expression of this personal data security septagon. While “Governance” represents the first “V” in CIA V&V, “Compliance” represents the second “V”.

If we had used the acronym of the seven parameters used in the security septagon, we would have arrived at CIA-ALCG as an extension of the familiar CIA. The CIA in CIA-ALCG is of course used as “Modified CIA” as explained in the article yesterday.

It is time that we shift our Information Security focus from CIA to CIA-ALCG as we migrate from “Information Security” to “Personal Data Security”. This would also be applicable where the context is security of both personal and non-personal data.

Yes, I am once again challenging the age old ISO concept much to the discomfort of some professionals who are having a role set problem as ISO auditors. But this is inevitable as the society moves from Information Security of all Data as one objective to Information Security under ITA 2000 and Personal Data Security under DPDPA 2023 (and other laws) as an objective of protecting personal and non personal data together in an organization. It is for the same reason that I repeatedly hold that ISO 27001 is necessary but not sufficient for Personal Data Protection and we need to implement DGPSI instead as the framework of choice.

Request for comments from professionals.

CIA is a well known security concept which defines the Information Security Frameworks such as ISO 27001 and many others.

Since ISMS objectives need to be modified for Personal Data security based on the Data Protection laws, there is a need to redefine Information Security as “Preservation of Confidentiality, Integrity, Availability and also the Value of Data”. The core concepts of Confidentiality, Integrity and Availability themselves need to be modified, and we need to adopt Mod C, Mod I and Mod A in the place of CIA. To this we need to add the value perception.

The Value perception itself needs to be looked at from two angles, namely the “Value of Data” arising from its cost or market value, and the need to prevent erosion of value through inadequate Governance measures leading to penalties under the laws.

Hence CIA Triad needs to be upgraded to Mod-CIA V&V. The reason why CIA has to be modified in the Data Protection context can be briefly explained below.

The reason is that, while “C” in ISMS reflects data access control which the IS department decides on the basis of “Role Based Access”, in the Data Privacy context the fixing of data access controls reflects the permissions given by the Data Principal based on the purpose of processing of personal data.

Similarly, while the “I” in the IS context reflects the need to ensure accuracy of data in the interest of the organization, in the DPDPA context “Maintenance” of accuracy, completeness and consistency is related to whether the processed data is meant for disclosure or decision making.

Also “A” in DPDPA scenario depends on the exercising of “Rights” under the law rather than the denial of access possibility.
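The contrast in the “C” element described above can be illustrated with a small Python sketch. This is only an illustration under stated assumptions and not part of DGPSI or any law: the role names, dataset names, purposes and the `Consent` class are all hypothetical, and combining the role check with the purpose check in `may_access` is one possible design choice, not a prescribed one.

```python
from dataclasses import dataclass, field


@dataclass
class Consent:
    """Hypothetical record of the purposes a Data Principal has permitted."""
    principal_id: str
    permitted_purposes: set[str] = field(default_factory=set)


# Role-based view (the ISMS "C"): the IS department maps roles to datasets.
# These roles and datasets are made up for the example.
ROLE_PERMISSIONS = {
    "marketing_analyst": {"customer_profile"},
    "billing_clerk": {"customer_profile", "payment_record"},
}


def role_allows(role: str, dataset: str) -> bool:
    """True if the organization's role mapping grants access to the dataset."""
    return dataset in ROLE_PERMISSIONS.get(role, set())


def purpose_allows(consent: Consent, purpose: str) -> bool:
    """True if the Data Principal permitted processing for this purpose."""
    return purpose in consent.permitted_purposes


def may_access(role: str, dataset: str, purpose: str, consent: Consent) -> bool:
    """Modified "C" (assumed design): both the role and the purpose must allow access."""
    return role_allows(role, dataset) and purpose_allows(consent, purpose)


consent = Consent("dp-001", {"billing"})
billing_ok = may_access("billing_clerk", "payment_record", "billing", consent)
marketing_ok = may_access("billing_clerk", "payment_record", "marketing", consent)
```

Here the same billing clerk is allowed to read the payment record for billing but not for marketing, because the Data Principal permitted only the billing purpose.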

Apart from the modified CIA concept, the context of Personal Data Governance under DPDPA Compliance also recognizes the financial value of data represented by the V in the new concept.

The value itself can be looked at from the perspective of the revenue generating potential, which is V+, while the opportunity cost of inadequate compliance results in a financial loss, which is represented by V-.

Thus the CIA Triad needs to be expanded to five elements of Personal data security namely the Modified Confidentiality, Modified Integrity, Modified Availability, Value preservation and Prevention of Value loss through penalties.

Currently, DGPSI Full version does take into account all the five elements and hopefully this practice will gain acceptability in due course in the industry.

I started writing this blog based on the news reports that appeared mainly on TV channels and the digital copies of the print media. The reports suggested that “X” (formerly Twitter) had filed a petition in Karnataka High Court against the Union of India alleging that the use of Section 79(3) on “Intermediaries” to get content removed is a violation of the freedom of expression.

Media made it look as if it was Elon Musk fighting against Indian Government.

Refer reports at Deccan Herald, Hindustan Times, Business Standard

You Tube: Times now : India Today : CNBC-TV 18

Thanks to one of my friends in the media, I got a copy of the petition in the night and as I read through the petition, it was clear that the essence of the objection raised was not about the power of the Government to remove the content which was damaging for India, but the due process that needs to be followed in such cases. It appeared that it was meant to teach a lesson to the Government officials who did not understand ITA 2000 even after 25 years and continued to issue notifications with their own interpretations which were wrong ab-initio.

As a person who has been in the field of Cyber Law education since 2000, I am ashamed that so much ignorance persists even in the Government and the set of legal advisors in Delhi who advise MeitY. Had MeitY contacted persons better aware of ITA 2000 anywhere in the country, it would not have met this embarrassment of being called “Ignorant” of ITA 2000.

At first glance from the newspaper reports, it appeared that X had built its petition on a wrong interpretation of Section 79(3), without appreciating that a notice under this section can only disable the safe harbour protection and cannot mandate content take down.

However, on a detailed perusal of the petition, it was clear that the petitioner was actually educating MeitY, MHA and the Ministry of Railways and pointing out the several mistakes made by them in issuing orders to X.com. I therefore think that the petitioner’s law firm must have enjoyed this exercise of drafting a petition that was meant to highlight the difference of two sections of ITA 2000 namely Section 69A and Section 79. This could be a great case study for students of law.

The impugned petition is based on the take down notice issued by the Ministry of Railways which is a demonstration of how not to interpret the law and how not to issue a notice of such nature.

On 11th February 2025, the Ministry of Railways had issued a mandate to X.com to remove some content within 36 hours of the “Issue of the communication”. (Not from the time of receipt). This order was issued under the earlier gazette notification issued by MeitY on 24th December 2024 which notified the Executive Director of Railways as an authority for the purpose of issuing notice to the intermediaries in relation to “any” information which is prohibited under any law for the time being in force pertaining to the Ministry of Railways and its attached offices.

The MeitY Gazette notification which designated the Executive Director as the authority for taking down content was wrongly quoting Section 79(3) instead of Section 69A as its legal basis.

Further, the order was conveyed through an email from the Deputy Director Public Relations of the Railway Board and not by the Executive Director directly. The email was from a Gmail domain and was obviously not digitally signed either.

The order conveyed to Twitter appears to have demanded that “…links be taken down immediately”.

Any prudent legal advisor would have suggested that it was preferable in such cases to state something to the effect…..

“It is hereby notified that the content referred to in the links quoted in the annexure is false and misleading and if it is not removed as per Section 79(3) of ITA 2000, the platform shall be considered ineligible for protection under the said section and we reserve the right to proceed against the platform as per the laws of the land”.

As a result of these multiple childish mistakes, the order can be cited as an example of how the Ministries, through their ignorance, may misuse the provisions.

Because of the mistakes committed, a ground has been given to the petitioner to argue that there is an attempt to bypass the due process of law under Section 69A, and it would be challenging to defend against this accusation.

I would like to advise the Government that instead of trying to defend this petition, it should immediately withdraw the earlier notices, issue modified notices duly drafted and file an affidavit in the Court so that the Court can dismiss the petition at the first stage itself as redundant.

If the case is pursued and Court takes a stand in favour of the petitioner, the media would project a wrong impression that Government does not have the power to regulate content under ITA 2000 and the entire Intermediary Guidelines which is under challenge in Delhi High Court would also be jeopardised.

I am apprehensive that this could even lead to the Court scrapping Section 79(3), as happened in the faulty judgement in the Shreya Singhal case.

It is therefore advisable for the MeitY to admit in its affidavit that it inadvertently failed to follow certain procedures and would take appropriate steps to handle such instances properly in future.

The Sahyog Portal is just a facilitation of communication and we hope it has no role to play on the wrongful process used in this particular instance. If necessary, corrections could be applied to the statements made on the portal also.

I would also advise “X” to accept the admission of inadvertent error in using the process (if given) and not stand on its own ego since a proper trial would also raise the question whether X is an Intermediary under ITA 2000 and whether it is entitled to any protection at all.

In this era of AI and data analytics, no social media can fulfil the conditions mentioned under section 79(2)(b) of ITA 2000 and neither X nor Face Book nor Instagram can avail the benefits of Section 79 despite the earlier wrong interpretations.

If Karnataka High Court looks at this angle of “Who is an Intermediary”, then another new chapter would be written in the Cyber Jurisprudence of the country. In case the petition goes into detailed trial, I wish the High Court uses this opportunity to focus on the concept of an “Intermediary”. Under the current practices of the social media platforms, which read through all messages, filter them, use them for machine learning and so on, they can never be considered mere conduits of messages, which is essential for them to be considered an “Intermediary”.

If X is held as “Not an intermediary”, then the Government needs neither Section 79 nor Section 69A to launch proceedings against social media platforms violating any provisions of BNS 2023. This would be an unintended backlash that X would suffer as a petitioner in this case.

The petition itself is doing a great service to the community of Cyber Law educators and is welcome since it has thrown up an opportunity to educate the Government of India about its own law, which somebody like Naavi could have explained in one single sitting. MeitY could have even contacted Padma Shri Dr T.K.Vishwanathan, who drafted ITA 2000 and who was nearby.

I also request the Karnataka High Court to limit its order to educating the Government on the scope of Section 79 vis-à-vis Section 69A, and not to jump to actions like scrapping the section, as the Supreme Court erroneously did in the Shreya Singhal case. For the last decade we have been pointing out again and again that the Supreme Court also did not understand the law before it scrapped Section 66A.

I should also express my appreciation of M/s Poovayya & Co for giving a lesson to the Government through their petition on the scope of Section 79 and Section 69A.

Naavi

(P.S: This article is meant for education of Cyber law students. It has undergone an extensive edit from the time I started writing last night to this morning when I edited it further. Kindly ignore the earlier versions if you had an occasion to view it before the edit…. Naavi)

After Mr Trump took over as President of USA, we have been anticipating some changes in the Data Protection regime, especially related to the HIPAA/HITECH Act and the EU-US Data transfer.

The DOGE activity will sooner or later catch up with the operations of the Medicaid and Medicare programs, which were the favourites during the Obama regime, and this could effect some changes in the HIPAA/HITECH regulations. However, this has not happened yet and we are waiting for the NPRM to be finalized.

In the meantime, the US and EU are at loggerheads politically and this could affect the EU-US data transfer regime, which can have an impact on India also.

The trigger for this seems to be the decision to reconstitute the FTC’s five member bench. The removal of two Democratic commissioners has left the Commission with two Republican nominees and without representation from the minority parties.

The EU has been demanding in the past that the US judicial system adapt itself to GDPR regulations and provide two guarantees namely

There was an uneasy truce on this aspect in the previous negotiations leading to the current EU-US Data Transfer Framework. This is likely to be disturbed by the recent developments particularly since the two removed commissioners are Democratic party representatives with a clout in the EU administration.

Soon this is likely to raise a demand for cancellation of the Data Transfer arrangement and consequential business disruptions.

India receives a lot of Data Processing business from the EU through US Data Controllers. This could be affected if the EU-US data transfer agreement gets suspended or otherwise disrupted. It is interesting that at the same time, the Indian DPDPA is also coming into operation. Whether Indian business will take advantage of the EU-US differences and establish more direct business with the EU Data Controllers under GDPR is worth watching.

The Indian DPDPA is flexible and provides for the setting up of notified Data processing centers for processing EU data under a GDPR Contract by an Indian Data Processor with an exemption from DPDPA. (ITA 2000 however is not exempted). Hopefully, innovative data processors in India will take advantage of the notification of DPDPA to increase their business share with the EU.