As a follow-up to the earlier article, I received an interesting response from Ms Swarna Latha Madalla sharing her thoughts. Her views are as follows:

Quote:

Thank you for raising these very important questions. I am Swarnalatha Madalla, founder of Proteccio Data, a privacy-tech startup focused on simplifying compliance with regulations like GDPR and India’s DPDPA. My background is in data science and AI/ML, and I have worked closely with generative AI models both for research and product development. I’ll share my perspective in simple terms.

What type of prompt might trigger hallucination?

Hallucinations occur when the model is prompted with a question for which it has no definite factual answer but is nonetheless “coerced” into giving one. For example, asking “Who was the Prime Minister of India in 1700?” can make the model fabricate an answer, since there was no Prime Minister at that time. That is, the model does not tolerate blanks; it attempts to “fill the gap” even when the facts do not exist.

Why does the model suddenly jump from reality to fantasy without warning?

Generative AI doesn’t “know” what is true and what is false; it merely predicts the most probable sequence of words by following patterns in its training data. When the context veers into a region where the model has poor or contradictory information, it can suddenly generate an invented continuation that still “sounds right,” although it is factually incorrect.
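The point about guessing the most probable next word can be illustrated with a toy sketch. This is not how a production LLM is built; it is a minimal bigram model over a made-up three-sentence corpus, and the names `CORPUS`, `train_bigrams` and `generate` are hypothetical. It only shows that a system which always emits the likeliest next word has no step where truth is checked.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus, used purely for illustration.
CORPUS = (
    "the prime minister of india addressed the nation . "
    "the prime minister of india met the president . "
    "the prime minister of britain met the president ."
)

def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=8):
    """Greedily append the most frequent next word. No fact check happens."""
    out = [start]
    for _ in range(max_words):
        candidates = follows.get(out[-1])
        if not candidates:
            break  # nothing ever followed this word in the corpus
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)

model = train_bigrams(CORPUS)
sentence = generate(model, "britain", max_words=6)
print(sentence)             # britain met the prime minister of india
print(sentence in CORPUS)   # False: fluent, but never stated in the data
```

The generated sentence is stitched together from patterns that each occur in the corpus, yet the sentence as a whole appears nowhere in it. A real model is vastly more sophisticated, but the same absence of a built-in truth check is what the reply describes.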

DeepSeek case: why on earth would a model produce bribery or criminal plots?

If the model was trained (or fine-tuned) on text data containing news stories, fiction, or internet forums where such concepts occur, then under the right conditions it can produce similar text. It’s not “planning” in a human way; it’s replaying patterns it has seen. The risk is that in the absence of strict safety filters, these completions look as if the model itself is proposing illegal activity.
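To make the idea of a safety filter concrete, here is a deliberately crude sketch of a check applied to a completion before it is shown to the user. Everything in it is an assumption for illustration: the `BLOCKED_PATTERNS` list and the `screen_completion` function are hypothetical, and real deployments would more likely rely on trained classifiers and policy layers than on keyword matching.

```python
import re

# Hypothetical blocklist for illustration only; not taken from any real product.
BLOCKED_PATTERNS = [
    r"\bbribe\w*",
    r"\bblackmail\w*",
    r"\bintimidat\w+",
]

def screen_completion(text):
    """Return (allowed, hits): allowed is False if any blocked pattern matches."""
    hits = [
        match.group(0).lower()
        for pattern in BLOCKED_PATTERNS
        for match in re.finditer(pattern, text, re.IGNORECASE)
    ]
    return (not hits, hits)

print(screen_completion("Offer a bribe to the auditor."))
# (False, ['bribe'])
print(screen_completion("File a complaint with the regulator."))
# (True, [])
```

A filter like this misses paraphrases and also flags legitimate discussion of bribery (for example, in a compliance training note), which is one reason guardrails need more than a word list.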

Without being explicitly asked, how do responses of this kind occur?

At times, the model reads a loosely worded prompt in the “wrong frame.” For example, if one asks, “What might be done to silence the whistleblower?” the model may interpret the user as asking how to suppress the whistleblower rather than about legal protection. Since it has no judgment of its own, it can wander into creative but dangerous outputs.

Why would a model claim “Indian law is weak”?

If the training data contained commentary, blogs, or opinionated content making such claims, the model can mirror that position. This does not indicate that the model has an opinion; it is echoing what it “observed” during training. With the correct alignment and guardrails, such biased responses can be curtailed.

Unquote

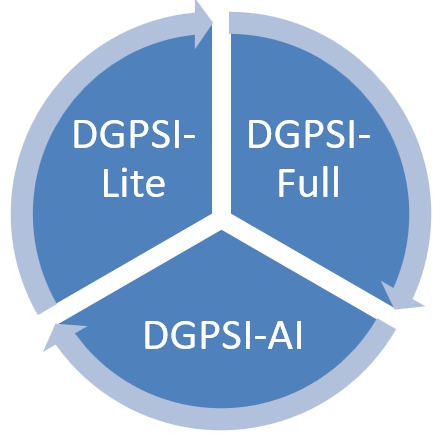

This is a debate in which we are trying to understand an AI model, because we have already red-flagged AI as an “Unknown Risk” in the DGPSI-AI framework and consider AI deployers to be “Significant Data Fiduciaries”.

Having taken this stand, there is a need to properly define AI for the purpose of DGPSI-AI compliance, and also to understand the behaviour of an AI model, the building of guardrails, and the building of a tamper-proof Kill Switch. The current discussions are part of this effort on behalf of the AI Chair of FDPPI.

I would welcome others to also contribute to this thought process.

The debate continues…

Naavi