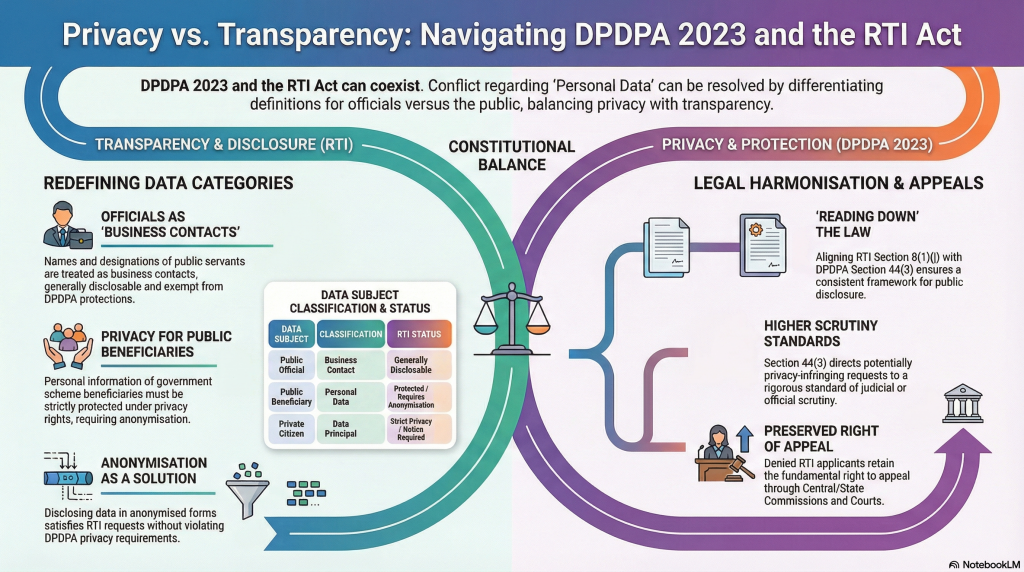

The petition of Mr Venkatesh Nayak against DPDPA was restrained, praying only for the removal of Section 44(3) and a few other sections, which we have discussed in detail in the previous series of articles.

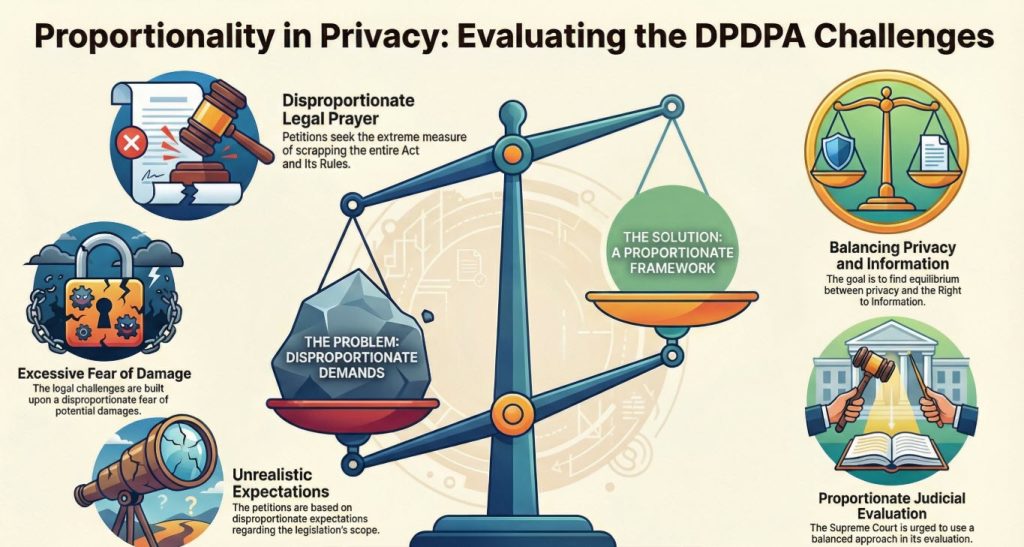

In comparison, the petition of the Reporter’s Collective Trust and Mr Nitin Sethi is conspicuous for its summary demand that the whole of DPDPA 2023 and the whole of the Rules be declared void.

The demand is ridiculously excessive. It indicates no real concern for the public interest and reflects only an anti-Government agenda to stop whatever good can happen. It is difficult to understand how the petitioners call themselves supporters of Privacy when they are trying to dismantle the very law meant to protect privacy.

We all know that no law is perfect. Sometimes laws need to be explained through the rules and even amended within a short time. In a complicated law like DPDPA, which seeks to balance multiple rights under the Constitution, differences are inevitable, and we should learn to manage them rather than try to scuttle the law itself.

Wise men warn, “Don’t cut off your nose if you have a cold”. Unfortunately, the petitioners who want the Act to be scrapped because of some disagreements have not heard of this proverb.

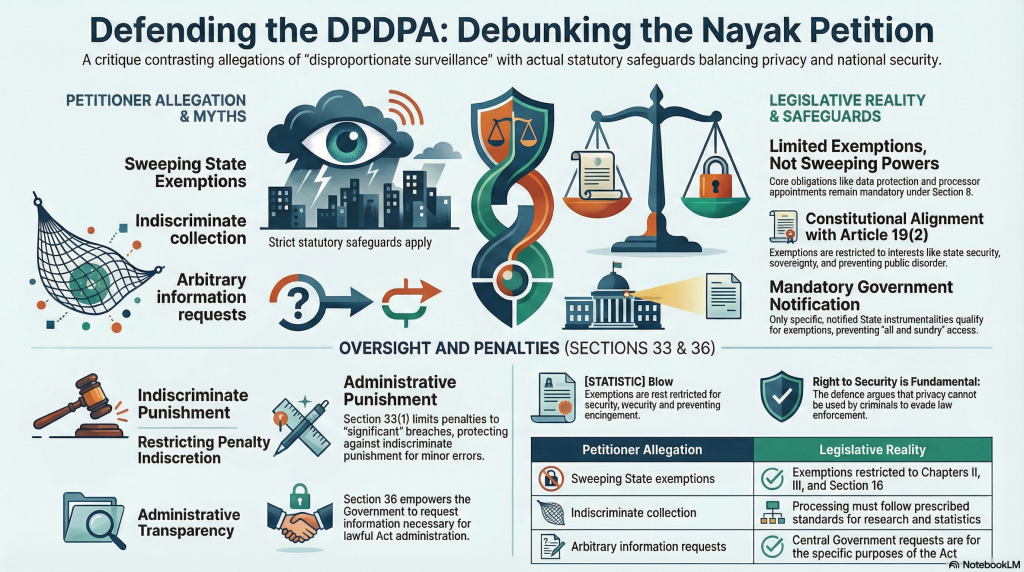

This petition has highlighted the following concerns/viewpoints that can be contested:

- Right to Information is a fundamental right under Article 19(1)(a) as per earlier Supreme Court judgements.

- Right to information is essential for carrying out the function of a journalist, and the Act does not provide an exemption for journalists.

- Amendment in Section 44(3) has no legitimate aim under Article 19(2)

- Proposed amendment interferes with the social audits that a journalist wants to conduct

- DPDPA applies only to digital information whereas RTI applies to all kinds of records, and hence the DPDPA provision is unreasonable.

- Disclosure under Section 8(2) of the RTI Act is discretionary and 8(1)(j) offers a better standard.

- K S Puttaswamy judgement should not apply to public purpose activities including journalism.

- Exemption for research under Section 17(2)(b) is not applicable to journalistic reports

- Section 12 mandates immediate deletion on withdrawal of consent, so evidence for a journalistic report may not be available for post facto validation.

- Whole of the Act and the Rules are void for “Vagueness”.

- Though Section 17(5) provides for exemption, the Central Government does not have powers to exempt for journalistic purposes.

- Government calling for information from a data fiduciary is violative of the Constitution and gives rise to “Potential for Abuse”.

- Even when a disclosure of personal information is prejudicial to the sovereignty and integrity of India, it cannot be prevented from being released under RTI.

- Section 36 enables “Unreasonable data searches” and is hence against the Puttaswamy judgement

- Because the Central Government has a range of less intrusive alternatives, including obtaining independent authorization from a Court, there is no need for Section 36.

- Data Protection Board lacks independence

- DPB functioning as a digital office is exclusionary.

- Penalties from Rs 50 crores to Rs 250 crores are exaggerated.

While we appreciate the ingenuity of the petitioners in picking out so many points in the Act and the Rules to object to, there are umpteen contradictions within the petition. In some cases they swear by the Puttaswamy judgement and in some cases they want it to be violated.

The net impression is that these petitioners do not tolerate the existence of the Government itself and do not want the Government to have any powers of governance. They respect the Puttaswamy judgement but want the Act to be scrapped. The arguments are highly speculative and do not merit even basic consideration.

The only point they make is “Journalism should have some exemptions”. They admit that the Act provides a power of exemption but still claim that the Government does not have that power. The petitioners are confused about what they want, and they express that confusion with clarity.

This petition deserves to be rejected with a directive to correct and resubmit it in a more specific form, avoiding self-contradictions.

We will continue our discussions on some of the individual points and highlight the contradictions.

Naavi