FDPPI is a “Not for Profit” organization by professionals and for professionals, and it always believes in providing more than value for money in its programs.

Since the registered participants are senior professionals, we need to accommodate more discussion during the two-day C.DPO.DA. training program on November 1 and 2. Hence it has been decided to provide some background videos on DPDPA, the DPDPA Rules and GDPR.

When the new DPDPA Rules are released, there will be a separate online session on the rules, which could be a three-hour session on a Sunday.

To provide further post-training engagement, all participants will be given one year's complimentary membership of FDPPI, worth Rs 6000/-.

Additionally, FDPPI will create two Special Interest Groups from among the participants. The first will focus on the new DPDPA Rules, so that the group can identify the pain points of different sectors and create documents that can be shared with the DPB and MeitY. The second will evaluate the Data Discovery, Classification and Consent Management software available for Data Fiduciaries with reference to DPDPA requirements and generate customization guidelines for the Data Fiduciaries.

With this approach, the C.DPO.DA. program of FDPPI will be unique and bring more value.

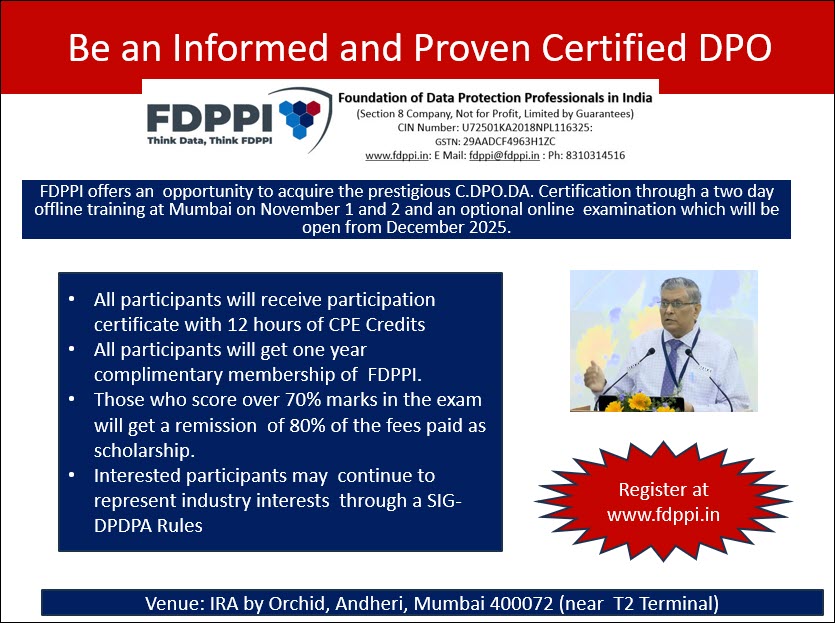

Details of the program are available below, with registration at www.fdppi.in

Naavi