For the attention of the Indian AI Committee headed by Dr Balaraman, Professor, IIT Madras. Since the Committee is not aware of the DGPSI-AI framework, developed voluntarily on behalf of Indian industry, it is our duty to present some of its salient features and explain how it addresses the AI-related risks of Deployers and, indirectly, the AI governance requirements of Developers. We are aware that the report has already been finalized and released, but it is necessary to place before the august Committee members what they deliberately chose not to refer to in their report, so as not to hurt vested interests.

DGPSI-AI is a framework that extends the DGPSI framework meant for DPDPA compliance. The DGPSI framework is guided by 12 principles and 50 implementation specifications that cover compliance with DPDPA 2023, ITA 2000 (for DPDPA Protected Data, or DPD), and relevant aspects of the Consumer Protection Act, Telecom Act, BNS, BSA and the BIS draft guidelines on Personal Data Governance and Protection. DGPSI-AI extends this framework to AI use within the Data Fiduciary environment and consists of six principles and nine implementation specifications. In this note we discuss only DGPSI-AI.

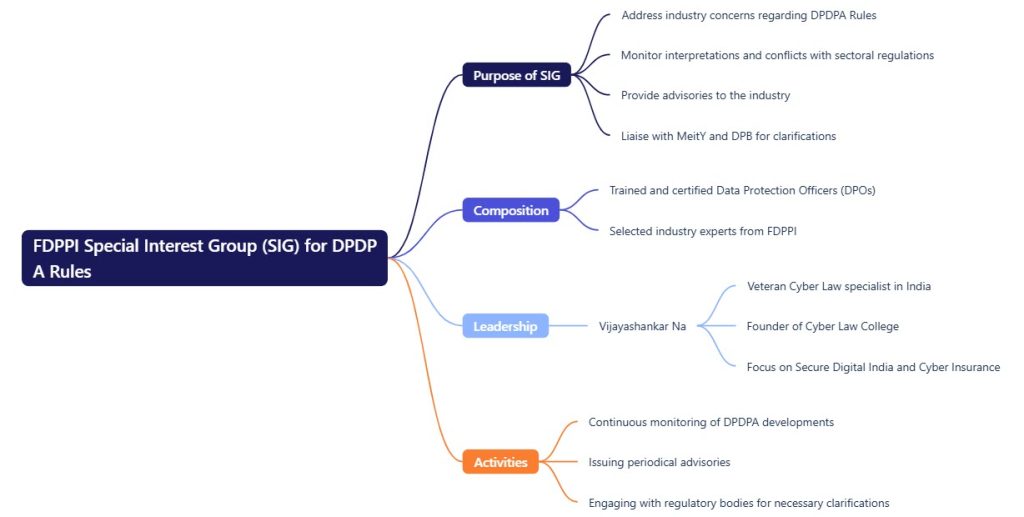

Within the next week or so, we will be conducting an open virtual conference on DGPSI and DGPSI-AI, and the Committee members are invited to participate in a detailed discussion where we will highlight how DGPSI completely covers the ISO 27701:2025 requirements. The Committee and MeitY should understand that these frameworks can, on their own, replace the need for compliance with a host of ISO standards, which are only best-practice standards and not meant for DPDPA compliance. In the AI context too, the IAIG refers to 26 ISO standards, indirectly hinting that Indian companies must seek compliance with them. This mindset of depending solely on ISO standards, to the exclusion of any attempt to develop Indian standards, needs to change. Let us shed the colonial mindset that anything coming from the West is good, and place our confidence in Mr Modi's “Made in India” concept.

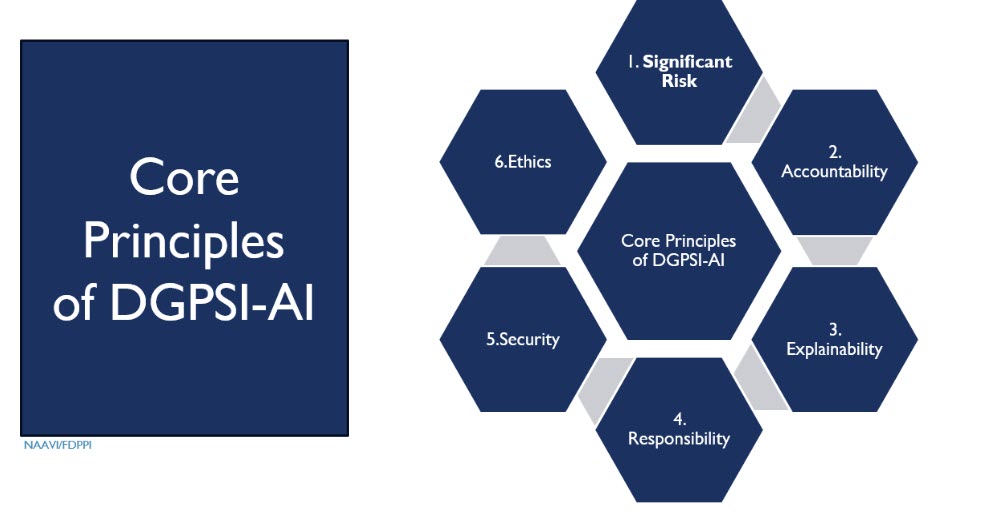

Let us now look at the six principles of DGPSI-AI depicted below.

We leave the detailed discussion to the book, which is available on Kindle (it should also be available in print, either directly from Naavi or once the publisher, White Falcon Publishing, wakes up from its deep slumber).

Now let us look at the seven sutras of the IAIG.

DGPSI-AI is built on Accountability, Explainability, Responsibility and Ethics, which together are meant to build “Trust”, the declared objective of the IAIG. Explainability in DGPSI-AI can also be mapped to “Understandable by Design” in the IAIG. Accountability is covered in both. Safety in the IAIG is covered under Security in DGPSI-AI. The IAIG concepts of Resilience, Sustainability and People First are covered under the DGPSI-AI principle of “Ethics”.

Where the IAIG tilts towards industry interests is in its concept of “Innovation over Restraint”. DGPSI-AI prefers “Innovation with Restraint” and flags the “Unknown Risk” associated with AI usage as a “Significant Risk”. By explaining the Restraint concept as “All things being equal, Responsible Innovation is prioritized over cautionary restraint”, the IAIG leans towards industry benefits rather than being “Data Principal Specific”.

Since under the DPDPA the Data Fiduciary is a trustee of the Data Principal, it cannot prioritize industry benefits over the risks to the Data Principal. Hence DGPSI-AI is a shade better than the IAIG in this respect.

Your comments are welcome… More comments will follow.

Naavi