When we discuss Artificial Intelligence and Machine Learning, we often draw parallels between how a human brain learns and how an AI algorithm learns. We are also aware that AI can exhibit rogue behaviour (refer to the video on ChatGPT below) and could be a threat to the human race. As the video demonstrates, ChatGPT has both Dr Jekyll and Mr Hyde within itself, and through appropriate prompting a user can evoke either the good response or the bad response.

Based on this, one school of thought suggests that ChatGPT or any other AI is just a tool, and whether it is good or bad depends on the person who uses it. In other words, the behaviour of the AI depends not only on the inherent nature of the AI but also on the prompts it receives from outside. In the case of ChatGPT, prompts come in the form of text fed into the software. In the case of a robot, they come from the sensors attached to the robot, which may try to replicate the senses of vision, hearing, touch, smell or taste, or any sixth sense that we may discover in future and that machines may have even if humans do not.

I recall that the human mind, if properly channelled, often exhibits seemingly miraculous powers of strength, smell or hearing. We are aware that surgery can be performed on a person under hypnosis without anaesthesia, that the body can be made as rigid as steel, and that a heightened, dog-like sense of smell can be imparted to a person under hypnosis.

Hypnotism as a subject, leading to age regression and super powers, is a different topic, but it shows that the human brain is endowed with far more capabilities than we realize.

Neurosurgeons in future may not stop at merely curing deficiencies of the brain but may also impart superhuman powers to it, and we need to discuss this as part of Neuro Rights regulation.

In the meantime, we need to appreciate that an AI created as a replica of the human brain may be able to surpass the average performance level of the human brain, and in such a state it is not just “Sentient” but superhuman. One easy example of this superhuman capability is the ability to remember things indefinitely.

The theory of the subconscious mind, and of hypnosis being able to activate it, is well known. But normal humans do experience “Forgetting”, and as they age their neuron activity may malfunction. An AI, however, may hold memory and recall it without any erosion. It is as if it operates in a state similar to that of a human whose conscious and subconscious minds are both working simultaneously.

Concept of AI Abuse

When we look at regulations for AI, we also need to ask the philosophical question of whether we can regulate “Upbringing” by parents. Maybe we do, since we treat some behaviour of parents as “Child Abuse” and regulate it.

We need to start debating if “AI abuse” can be similarly regulated and that is what we are looking at in the form of AI Ethics.

One traditional way of looking at regulation affecting AI is to treat AI as software that works on “Binary instructions” which interact with a “Code Reading device” to change the behaviour of a computer screen, a speaker and so on, and to regulate this as induced computer behaviour.

In this perspective, the behaviour of an AI is attributed to the owner of the system (Section 11 of ITA 2000), and regulation that works through this link to the owner looks sufficient.

However, the world at large is today discussing the “Sentient” status of AI, “Feelings” or “Bias” in machine learning, the treatment of AI as a “Juridical person”, and so on.

I am OK-You are OK principle for AI

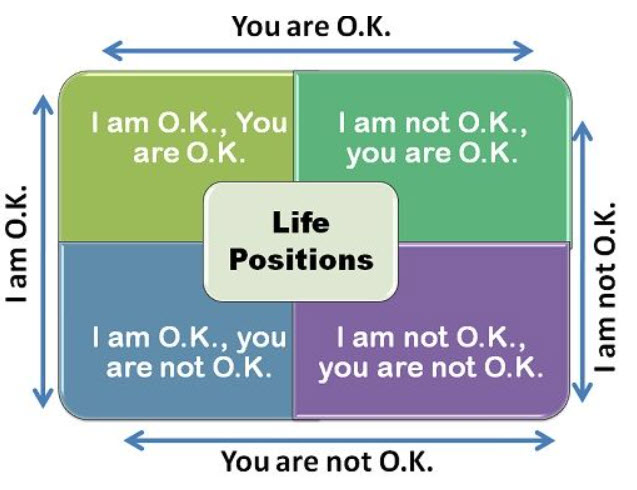

While one school of thought supports the theory that AI can be “Sentient” and that an AI algorithm should therefore be considered a “Juridical person”, there is scope for debating why an AI behaves in a particular manner, and whether there is any relationship between the behaviour of these high-end AI systems and the behavioural theories that persons like Eric Berne or Thomas Harris propounded for human behavioural analysis.

I am not sure if this thought has surfaced elsewhere in the world, but even if this is the first time that it has emerged into the open, there is scope for further research by behavioural theorists and AI developers. Maybe in the process we will find some guidance for thinking about AI regulation and Neuro Rights regulation.

To start with, let us look at the development of “Bias” in AI. We normally associate it with deficiencies in the training data. However, we must appreciate that even amongst humans we have persons with a “Bias”. We live with them as part of our workforce or as friends.

Just as a bad upbringing by parents makes some individuals turn out bad, bad training could make an AI biased. Sometimes the environment turns a bad person into a good one and a good person into a bad one. Similarly, a good ChatGPT can be converted into a rogue ChatGPT by malicious prompting. I am not sure whether an AI that has been created capable of responding to both good and bad prompts can be reined in by the user, through his prompts, to adopt some self-regulatory ethical principles. Experts in AI can respond to this.

While the creator of an AI can try to introduce some ethical boundaries and ensure that motivated prompting by a user does not break the ethical code, the question is whether the law can mandate the creation of AI of the “I am OK-You are OK” type rather than the “I am OK-You are not OK” or “I am not OK-You are not OK” types.

If so, either as ethics or as law, the AI developer needs to design his ML process to generate “I am OK-You are OK” type AI, if that is considered good for society. This will be the “Due Diligence” of the AI developer.

This is different from the usual discussion on “Prevention of Bias” arising out of bad training data which has been flagged by the industry at present. We can call this Naavi’s theory of AI Behavioural Regulation.

When we are drawing up regulations for AI, the question is whether we need to mandate that the developer shall try to generate an “I am OK-You are OK” type and ban the “I am not OK-You are not OK” or “I am OK-You are not OK” types.

The regulatory suggestion should be that “I am OK-You are OK” is the due diligence. “I am not OK-You are not OK” is banned and the other two types are to be regulated in some form.
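The suggestion above can be written down as a simple lookup. The following is only an illustrative sketch in Python: the names `LifePosition`, `Status` and `regulatory_status` are hypothetical, and the fourth position, “I am not OK-You are OK”, is taken from Thomas Harris’s four life positions since the text above refers to it only as one of “the other two types”.

```python
from enum import Enum


class LifePosition(Enum):
    """The four life positions described by Thomas Harris."""
    OK_OK = "I am OK-You are OK"
    OK_NOT_OK = "I am OK-You are not OK"
    NOT_OK_OK = "I am not OK-You are OK"
    NOT_OK_NOT_OK = "I am not OK-You are not OK"


class Status(Enum):
    """Hypothetical labels for the regulatory treatment suggested above."""
    DUE_DILIGENCE = "due diligence"
    REGULATED = "regulated in some form"
    BANNED = "banned"


def regulatory_status(position: LifePosition) -> Status:
    """Map an AI's behavioural type to the suggested regulatory treatment."""
    if position is LifePosition.OK_OK:
        return Status.DUE_DILIGENCE
    if position is LifePosition.NOT_OK_NOT_OK:
        return Status.BANNED
    # The remaining two types are to be regulated in some form.
    return Status.REGULATED


if __name__ == "__main__":
    for position in LifePosition:
        print(f"{position.value}: {regulatory_status(position).value}")
```

How an AI would actually be assessed as belonging to one type or another is the open research question and is not addressed by this sketch.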

Birth Certificate for AI

Naavi has been on record stating that if we can stamp every AI algorithm with an owner’s stamp, it is like assigning the responsibility for its behaviour to the creator, and this would go a long way towards ensuring a responsible AI society.

This can be achieved by providing that every AI needs to be mandatorily registered with a regulatory authority.

Just as we have a mandatory birth certificate for humans, there should be a mandatory “AI Activation Certificate” authorized by a regulator. It can also be accompanied by an “AI Deactivation Certificate”, the equivalent of a “Death Certificate”. Unregistered AI should be banned from use in society like an “Illegal Currency”.

When the license is made transferable, a transfer is like a minor being adopted by foster parents or a lady marrying and adopting a new family name; the change of environmental control over the AI algorithm is accordingly recognized and recorded.
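To make the idea concrete, the registration scheme can be sketched as a small register that records activation, transfer and deactivation. This is a hypothetical illustration in Python, not a proposal for an actual system: the names `AICertificate`, `AIRegistry` and their methods are invented for this example, and the rule that a deactivated AI can no longer be used or transferred is an assumption drawn from the “Death Certificate” analogy.

```python
from dataclasses import dataclass, field


@dataclass
class AICertificate:
    """Hypothetical 'AI Activation Certificate' held by the regulator."""
    ai_id: str
    owner: str
    active: bool = True
    previous_owners: list = field(default_factory=list)


class AIRegistry:
    """Illustrative register of AI algorithms kept by a regulatory authority."""

    def __init__(self):
        self._records = {}

    def activate(self, ai_id, owner):
        """Issue the 'birth certificate' and stamp the AI with its owner."""
        if ai_id in self._records:
            raise ValueError(f"{ai_id} is already registered")
        certificate = AICertificate(ai_id, owner)
        self._records[ai_id] = certificate
        return certificate

    def transfer(self, ai_id, new_owner):
        """Record a change of owner, like an adoption or a change of name."""
        certificate = self._records[ai_id]
        if not certificate.active:
            # Assumption: a deactivated AI cannot be transferred.
            raise ValueError(f"{ai_id} has been deactivated")
        certificate.previous_owners.append(certificate.owner)
        certificate.owner = new_owner

    def deactivate(self, ai_id):
        """Issue the 'death certificate' equivalent."""
        self._records[ai_id].active = False

    def may_be_used(self, ai_id):
        """Unregistered or deactivated AI may not be used."""
        certificate = self._records.get(ai_id)
        return certificate is not None and certificate.active


if __name__ == "__main__":
    registry = AIRegistry()
    registry.activate("algo-001", "Developer A")
    registry.transfer("algo-001", "Company B")
    print(registry.may_be_used("algo-001"))  # registered and active
    print(registry.may_be_used("algo-999"))  # never registered
```

Keeping the list of previous owners is what allows responsibility for behaviour to be traced back through each change of control.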

Mandatory Registration coupled with a Development guideline on the I am OK-You are OK goal should be considered when India is trying to make a law for AI.

For the time being, I leave this as a thought for research and would like to add more thoughts as we go along.

Readers are welcome to add their thoughts.

Naavi

P.S: Background of Naavi

Naavi entered the realm of “Behavioural Science” some time in 1979-80, attending a training program for Branch Managers of IOB at Pune. The theme of the 6-day program for rural branch managers was “Transactional Analysis” and the faculty was one Dr Jasjit Singh. (Or was it Jaswant Singh?) Since then, “Transactional Analysis” and “Games People Play” by Dr Eric Berne, the concept of “I’m OK-You’re OK” by Dr Thomas Harris, and similar works have been of interest to me. Even earlier, Professor Din Coly’s concepts of hypnotism had been of interest, and in more recent times they motivated me to pursue a Certificate of hypnosis from the California Institute of Hypnosis. Finally linking up with Neuro Rights has been a journey of its own, besides the Cyber Law-Privacy journey of Naavi, that needs to be recalled.

Also see these videos