One of society's major concerns regarding AI is the “Dis-intermediation of human beings from the decision process”. The risk of an AI system becoming sentient at some point in the future remains the long-term risk.

In the short and medium term, AI already poses risks such as “Biased outputs due to faulty machine training”, “Automated Decision Making”, “Poisoning of Training models”, “Behavioural Manipulations”, “Neuro-Rights Violations” and “Destruction of the Right of Choice of a human”.

One specific area of concern is the development of large language models that predict the action of a user with a creative license. The creative license leads to “Hallucination” and “Rogue behaviour” in an LLM like ChatGPT or BARD, and could create more problems when such software is embedded into humanoid robots.

Industrial robots, on the other hand, are less prone to such rogue behaviour on their own (except when they are hacked), since the creative license given to an industrial robot is limited and they operate in the ANI (Artificial Narrow Intelligence) area.

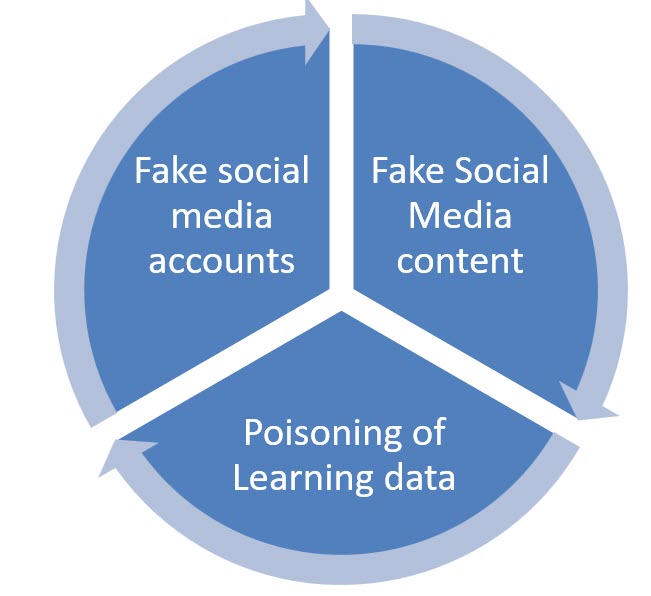

In India, the use of AI to generate “Deep Fakes” and “Fake news” is already in vogue. False data is being fed into the internet on a large scale in the hope that it will poison the learning systems which parse information from internet resources such as websites, blogs, Instagram, X, etc. There are many declared and undeclared “Parody” accounts which boldly state falsehoods that a casual observer may consider true. The sole purpose of such accounts and content is to poison the learning systems that extract public data and incorporate it into news delivery systems. Many AI systems generate content for such fake X accounts, so that AI produces false information that feeds back into the training data and generates further fake news.

Unfortunately, the Indian Supreme Court, dancing to the tune of the anti-national lobby, frustrated the efforts of the Government to call out fake narratives, even when such fake narratives are in the name of Ministries and Government departments.

The EU AI Act recognizes the risk of Generative AI and identifies it as “High Risk” AI by underscoring “High Impact capabilities” and “Systemic risk at Union level”.

Even under prohibited AI practices, the EU AI Act includes AI systems that deploy

“subliminal techniques beyond a person’s consciousness or purposefully manipulative or deceptive techniques, with the objective to or the effect of materially distorting a person’s or a group of persons’ behaviour by appreciably impairing the person’s ability to make an informed decision, thereby causing the person to take a decision that that person would not have otherwise taken in a manner that causes or is likely to cause that person, another person or group of persons significant harm;”

Many of the LLMs could be posing such risks through their predictive generation of content, either as text or speech. A “Deepfake” per se (for fun) may not be classified as “High Risk” under the EU AI Act but, depending on its usage, a deepfake can be considered “High Risk” or “Prohibited Risk”.

Title VIIIA specifically addresses General Purpose AI models. The compliance measures related to these impact the developers more than the deployers, since deployers would be relying upon the conformity assessment assurances given by the developers.

Where an AI system that is already in the market and has not been classified as a high risk AI system is modified by an intermediary so that it becomes a high risk AI system, the intermediary will itself be considered the “provider” (developer).

1. A general purpose AI model shall be classified as general-purpose AI model with systemic risk if it meets any of the following criteria:

(a) it has high impact capabilities evaluated on the basis of appropriate technical tools and methodologies, including indicators and benchmarks;

(b) based on a decision of the Commission, ex officio or following a qualified alert by the scientific panel that a general purpose AI model has capabilities or impact equivalent to those of point (a).

2. A general purpose AI model shall be presumed to have high impact capabilities pursuant to point (a) of paragraph 1 when the cumulative amount of compute used for its training measured in floating point operations (FLOPs) is greater than 10^25.*
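The presumption in paragraph 2 is a simple numeric test, and a minimal Python sketch may make it concrete. The function name `presumed_high_impact` and the idea of summing compute across several training runs are illustrative assumptions, not part of the Act; the only figure taken from the text is the 10^25 threshold and the "greater than" comparison.

```python
# Threshold quoted in paragraph 2: cumulative training compute, in FLOPs.
# Kept as an exact integer; the float literal 1e25 is only an approximation
# of 10**25 and would compare as slightly larger.
SYSTEMIC_RISK_FLOP_THRESHOLD = 10**25


def presumed_high_impact(training_run_flops):
    """Return True if the cumulative compute is strictly greater than 10^25.

    `training_run_flops` is a hypothetical list of integer FLOP counts,
    one per training run, summed here as one reading of "cumulative".
    """
    cumulative = sum(training_run_flops)
    return cumulative > SYSTEMIC_RISK_FLOP_THRESHOLD


# Hypothetical figures for illustration only.
runs = [4 * 10**24, 7 * 10**24]
print(presumed_high_impact(runs))      # True: 1.1 x 10^25 exceeds the threshold
print(presumed_high_impact([10**25]))  # False: exactly 10^25 is not "greater than"
```

Whether a given model actually falls under the presumption depends on how its provider measures training compute, which this sketch does not attempt to model.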

According to Article 52b:

Where a general purpose AI model meets the requirements referred to in points (a) of Article 52a(1), the relevant provider shall notify the Commission without delay and in any event within 2 weeks after those requirements are met or it becomes known that these requirements will be met. That notification shall include the information necessary to demonstrate that the relevant requirements have been met. If the Commission becomes aware of a general purpose AI model presenting systemic risks of which it has not been notified, it may decide to designate it as a model with systemic risk.

The Commission shall ensure that a list of general purpose AI models with systemic risk is published and shall keep that list up to date, without prejudice to the need to respect and protect intellectual property rights and confidential business information or trade secrets in accordance with Union and national law.

Article 52c sets out the obligations for providers of general purpose AI models, which may be relevant to such providers and to persons who build their products on top of such models and market them under their own brand name. (We are skipping further discussion on this since we are focussing on the user’s compliance requirement for the time being).

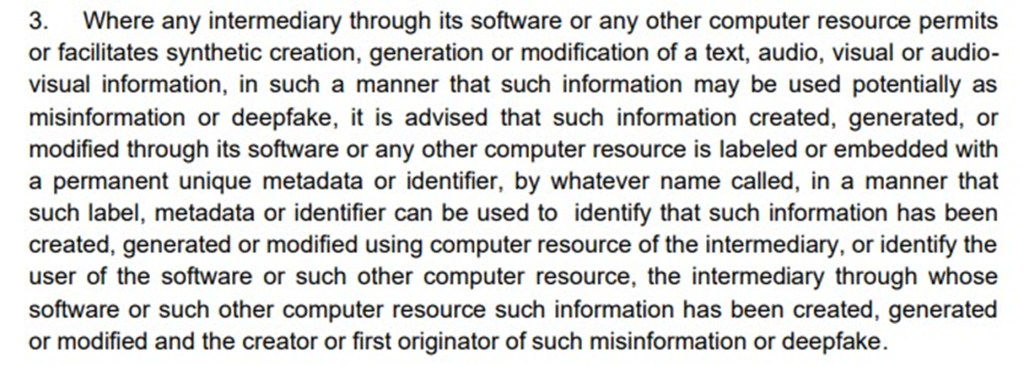

It may, however, be noted that the MeitY advisory of March 4th, reproduced below, also requires notification to MeitY and registration of the person accountable for the Generative AI software.

This notification has been made under ITA 2000 as an Intermediary guideline treating the deployer of the AI as an intermediary.

Naavi

(More to follow…)

Reference: *

A floating-point operation is any mathematical operation (such as +, -, *, /) or assignment that involves floating-point numbers (as opposed to binary integer operations).

Floating-point numbers have decimal points in them. The number 2.0 is a floating-point number because it has a decimal in it. The number 2 (without a decimal point) is a binary integer.

Floating-point operations involve floating-point numbers and typically take longer to execute than simple binary integer operations. For this reason, most embedded applications avoid widespread usage of floating-point math in favor of faster, smaller integer operations.
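The distinction drawn in this note between `2` and `2.0` can be seen directly in Python. This is only an illustrative sketch; the variable names are arbitrary and it says nothing about how training compute is counted in practice.

```python
whole = 2       # integer: no decimal point
decimal = 2.0   # floating-point: has a decimal point

print(type(whole).__name__)    # int
print(type(decimal).__name__)  # float

# The same "+" is an integer operation in one case and a
# floating-point operation in the other.
int_sum = whole + whole        # integer addition
float_sum = decimal + decimal  # floating-point addition
print(int_sum, float_sum)      # 4 4.0
```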