The demand for “Transparency” in the processing of personal data is part of every data protection law. As an associated concept, most data protection laws also restrict automated decision making.

As technology develops, many organizations use AI/ML algorithms that are opaque about how data is processed within the algorithm. The algorithms may also be the property of third-party service providers whose services the data controllers use.

Some service providers who offer their services under the SaaS model operate as “Joint Controllers” and not as “Processors”, since they would not like to share the “Means of Processing” with the Data Controllers. They commit to the end result and hold the processing in confidence as their trade secret or protected intellectual property.

Many data protection laws allow for masking of information from normal disclosure requirements for reasons of protection of trade secrets.

In this environment, there are a few thoughts that are emerging about “Algorithmic transparency”.

Algorithmic transparency is the principle that the factors that influence the decisions made by algorithms should be visible, or transparent, to the people who use, regulate, and are affected by systems that employ those algorithms.

Under this principle, the inputs to the algorithm and the algorithm’s use itself must be known, but they need not be fair. The organizations that use algorithms must also be accountable for the decisions made by those algorithms, even though the decisions are being made by a machine and not by a human being.

The concept of “Algorithmic accountability” raises a question about the protection of the trade secrets involved in the development of such algorithms, which may be the core asset of many start-ups.

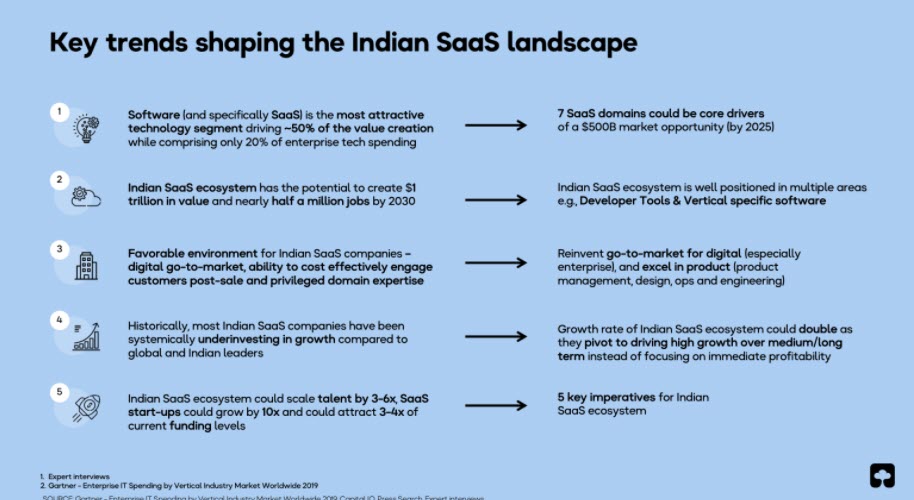

Recently, a study by Saasbhoomi.com found that the Indian SaaS ecosystem has the potential to create $1 trillion in value and nearly half a million jobs by 2030.

If, however, the SaaS ecosystem is to realize its full potential, it has to wade through the challenges posed by the emerging concept of “Algorithmic Transparency” in privacy laws.

Though most data protection regulations do respect the presence of “Trade Secrets” in business and accommodate some flexibility in application of transparency norms, the issue may gather momentum in the coming days through Privacy Activism.

The old Canadian Privacy law PIPEDA already spoke of the need for Algorithmic transparency and indicated in its model code that Transparency in the context of Privacy requires algorithmic transparency. It said that Consumers would now have the right to require an organization to explain how an automated decision-making system made a prediction, recommendation or decision.

Even in the UK, where there is competitive privacy activism against GDPR, there are discussions on algorithmic transparency. A recent article on the blog of the Centre for Data Ethics and Innovation recommended that the government place a mandatory transparency obligation on all public sector organisations that use algorithms when making significant decisions affecting individuals, requiring the proactive publication of information about those algorithms. It will not be long before activists take up the idea and start pushing the concept in the private sector as well.

If innovation is to be nurtured, there needs to be appropriate regulation that does not mandate disclosure of what SaaS developers would consider their proprietary information.

If, however, SaaS developers tend to ignore the privacy regulations, there will be grounds for privacy activists to push hard for algorithmic transparency. This could lead to a new round of conflicts between the IPR supporters and the privacy supporters. Considering the attitude of some of the supervisory authorities in the EU and of the Court of Justice of the EU itself, it will not be surprising if some of the supervisory authorities start ruling in favour of algorithmic transparency.

We hope that the upcoming Indian data protection law recognizes the need to encourage innovation by supporting some level of confidentiality for trade secrets, which include the way algorithms process personal data.

If the demand for algorithmic transparency needs to be balanced against the disclosure of automated processing, the Indian authorities may consider using the Sandbox system and require that information about the processing of personal data by algorithms be escrowed in confidence with the Data Protection Authority and protected by copyright law. In recent times, the Indian patent authorities have been liberal in interpreting “Software” for patent purposes and have granted patents for what are essentially software operations. Some algorithm creators may use patents to protect their innovations, and if so, that may satisfy the privacy activists. The sandbox system could probably assist patent applicants during the time the patent application remains in contention before approval.

Naavi